Generative Artificial Intelligence (AI) is developing rapidly and threatens to upend norms on the internet and in classrooms. Generative AI programs such as ChatGPT can produce text (and other data) that is largely indistinguishable from text written by humans. Unlike humans, however, AI can generate text at volumes and speeds many orders of magnitude greater than a single human could achieve.

At the same time, AI-generated text is of variable quality. There is a risk that AI-generated text will come to dominate online discourse, drowning out the voices, opinions, and thinking of real humans while simultaneously enabling the proliferation of online deception and manipulation (for example, fake news or fake opinions). A growing public concern is that generative AI can be used to cheat on essay assignments in education and evaluation settings, to impersonate a human in validation or authentication security controls, or for other unethical or even illegal purposes.

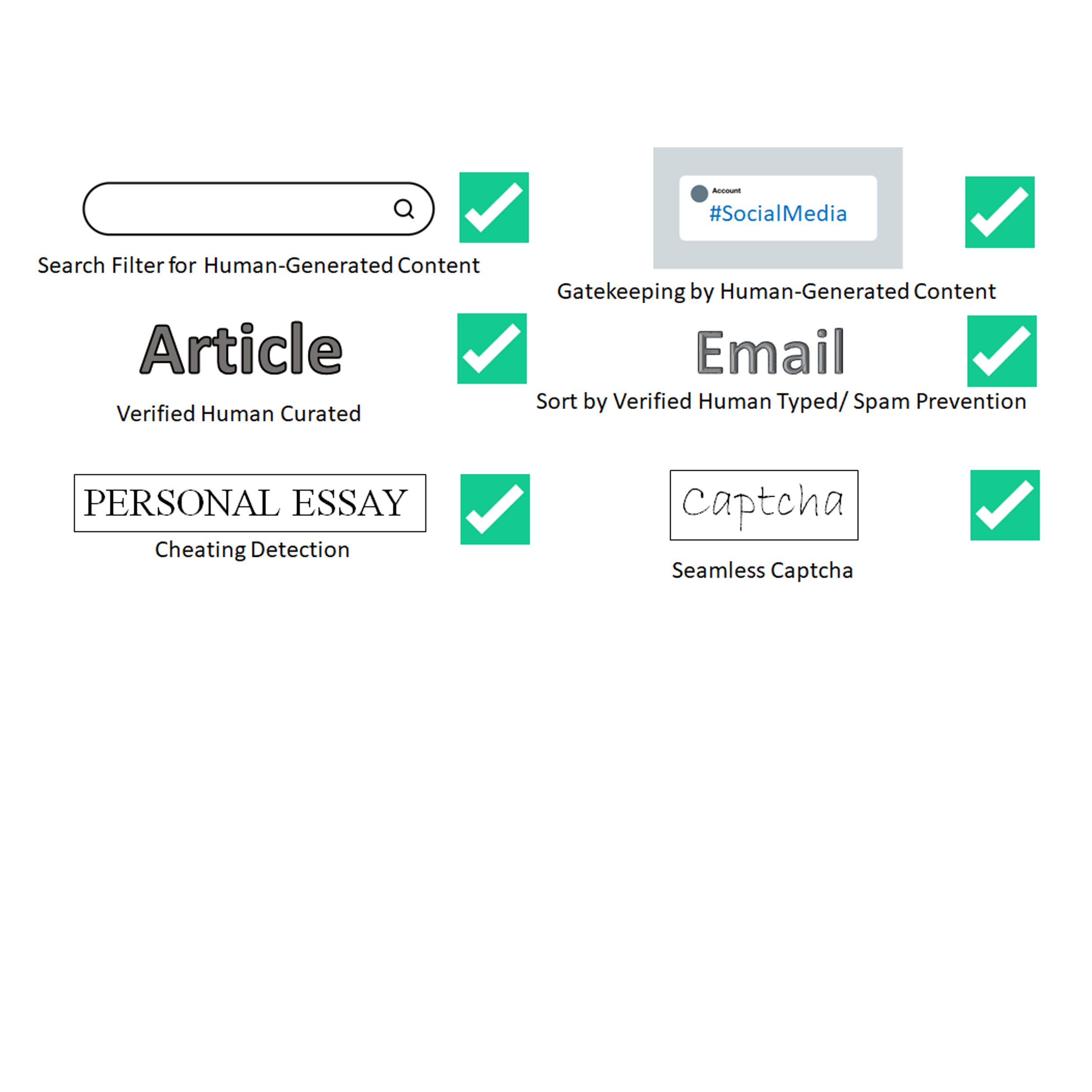

LLNL has invented a new system that uses public key cryptography to differentiate between human-generated text and AI-generated text. This invention can be used to validate that text is likely to be human-generated for the purposes of sorting or gatekeeping on the internet, to detect cheating on essay assignments, and to serve as an automatic captcha that does away with the hassle of traditional captchas.

- This invention validates that typed data (text) is likely to have been generated by a human and not by a generative AI. The validation process produces an anonymized digital signature attesting that the text was human-generated. The digital signature can be attached to the text as metadata and verified by third parties. The digital signature can also contain data related to the timespan and history over which the text was typed, the app or context in which the text was typed, and, in certain applications, identifying information about who typed the text.

- The digital signature can be easily verified by third-party websites. Websites can then use this evidence that the text was likely human-generated to prioritize, curate, or gatekeep content (e.g., to prevent AI-generated text from getting in).
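To make the sign-then-verify flow described above concrete, here is a minimal sketch in Python. It is not LLNL's implementation: the source does not name a signature scheme or describe how human typing is detected, so the sketch skips detection entirely and uses a Lamport one-time signature (a simple hash-based public key scheme) only because it needs nothing beyond the standard library. The function names (`make_attestation`, `check_attestation`) and the metadata fields are hypothetical, chosen for illustration.

```python
import hashlib
import json
import secrets

HASH_BITS = 256  # SHA-256 digest size; one pair of secrets per digest bit


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def generate_keypair():
    """Return (private, public) keys for ONE Lamport signature.

    Illustrative stand-in only: a Lamport key must never sign twice.
    """
    private = [(secrets.token_bytes(32), secrets.token_bytes(32))
               for _ in range(HASH_BITS)]
    public = [(_h(a), _h(b)) for a, b in private]
    return private, public


def _digest_bits(message: bytes):
    digest = int.from_bytes(_h(message), "big")
    return [(digest >> i) & 1 for i in range(HASH_BITS)]


def sign(message: bytes, private):
    # Reveal one secret per digest bit, selected by that bit's value.
    return [private[i][bit] for i, bit in enumerate(_digest_bits(message))]


def verify(message: bytes, signature, public) -> bool:
    if len(signature) != HASH_BITS:
        return False
    return all(_h(signature[i]) == public[i][bit]
               for i, bit in enumerate(_digest_bits(message)))


def make_attestation(text: str, metadata: dict, private) -> dict:
    """Sign a hash of the text plus metadata (hypothetical payload layout)."""
    payload = json.dumps(
        {"text_sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
         "metadata": metadata},
        sort_keys=True,
    ).encode("utf-8")
    return {"payload": payload, "signature": sign(payload, private)}


def check_attestation(text: str, attestation: dict, public) -> bool:
    """Third-party check: valid signature AND payload matches this text."""
    if not verify(attestation["payload"], attestation["signature"], public):
        return False
    claimed = json.loads(attestation["payload"])["text_sha256"]
    return claimed == hashlib.sha256(text.encode("utf-8")).hexdigest()


# Usage: the typing app signs; a website verifies with only the public key.
private_key, public_key = generate_keypair()
essay = "This paragraph was typed by a person."
attestation = make_attestation(
    essay, {"app": "example-editor", "typing_seconds": 412}, private_key
)
print(check_attestation(essay, attestation, public_key))
print(check_attestation(essay + " (edited)", attestation, public_key))
```

Because the signed payload holds only a hash of the text and context metadata, the verifier learns nothing about the author unless identifying fields are deliberately included. A real deployment would presumably use a standardized signature scheme rather than this one-time construction.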

Image Caption: LLNL’s invention can be used to anonymously certify that typed data was likely generated by a human. This certification can be used in third-party applications for filtering, gatekeeping, vetting, detecting cheating, etc.

The primary advantage of LLNL’s novel system is that it enables validation that text was human-generated. This advantage can be used to implement new cybersecurity controls and to build trust in generative AI applications.

- Human verification for authentication and identity management systems, with auto-generated digital signatures as an optional added feature

- Gatekeeping for operational technologies and control systems

- Detecting AI-generated text in education, evaluation, and journalism settings

- Automated human-input verification for search engines and web forms, replacing the picture puzzles that current captchas require users to solve

- Fully anonymized operation, with optional linkage to a fingerprint scanner or other biometrics (e.g., keystroke dynamics analysis) in select applications where verified identity is required

Current stage of technology development: TRL 2 (March 2023)

LLNL has filed for patent protection on this invention.